Building a meta-jobboard

Posted March 22, 2025; last updated April 03, 2025

I’m currently looking for an apprenticeship for next September (btw, if you’re looking for an apprentice for a 3-year contract, contact me!). This is a very annoying process, with JavaScript-heavy sites hiding at every corner, forms that erroneously autofill the fields from your resume with 100% confidence, and a dozen jobboards to check, all with varying levels of user-friendliness and general quality. And who could forget the delight of being ignored 99% of the time!

For today, however, I would like to focus only on the job boards, and specifically, on how I got rid of them in my search for an apprenticeship.

Jobboards fucking suck

Or sometimes they don’t. But generally, they do.

What infuriates me the most about jobboards (sites like LinkedIn, Indeed, Welcome to the Jungle, HelloWork, etc.) is not the bloat of some (looking at you, LinkedIn), or the fact that I have to look at multiple different interfaces per day. What really gets me is having to sift through dozens of jobs that are either completely irrelevant to my search, are wanna-spend-8-hours-in-front-of-Microsoft-Excel jobs, or are improperly labeled (wrong location, contract type or duration, etc.).

Each one of these jobboards has its own unique and quirky search engine that seems to try to drown the good job listings in a pile of terrible ones. Now, repeat that sifting n times, n being the number of jobboards you want to inflict upon yourself.

| Observation | This is a stupid, mind-numbing task which I hate doing |
|---|---|
| Principle | I am a programmer, one who automates stupid, mind-numbing tasks that I hate doing |
| Conclusion | This task will be automated |

Tools of Choice

For this “weekend project” (as I like calling them, regardless of which day of the week it is), I assembled a tech stack that aligned with my preferences and the project’s needs:

- Node.js: Because I genuinely enjoy JavaScript and I’m comfortable with it. (Choosing a tech you actually like makes development much easier)

- TypeScript: Because coding with raw JavaScript types (read: no typing) sometimes causes headaches that type safety helps prevent, especially when creating more “abstract” functions. Plus, I’m just accustomed to TypeScript now.

- Playwright: Years ago, I used Selenium to build an Instagram post scraper with dreams of running a fully automated page (spoiler: it didn’t amount to much). Playwright is “Selenium but better” — the cool kid on the block that preemptively solves common issues, like waiting for events to complete before moving on.

- InstructorJS and Zod: These make LLM output predictable by specifying a JSON schema and forcing the LLM to adhere to that structure. They add a layer of type safety over the otherwise unpredictable outputs of LLMs.

Collecting Data: The “Fun” Part, aka scraping

For each job board, my process looks like this:

- Navigate to the jobs listing page

- Open my developer console and hunt for specific HTML/CSS identifiers (an id, a data-testid, an aria-label) that I can use to select job offers programmatically

- Visit a random job listing and examine its HTML structure

- Identify which element(s) contain the job details I need. I generally don’t bother handpicking the specific title, location, or description elements; I just find the element wrapping the whole job ad.

Using Playwright’s (very intuitive) API, I write down the “algorithm” as a literal series of instructions of “where to click and what to read” to collect text from each page.
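For illustration, one board's "where to click and what to read" recipe might look roughly like the sketch below. The `BoardRecipe` shape, the selectors, and the function name are invented for the example. `PageLike` lists only the few Playwright `Page`/`Locator` methods the sketch relies on (`goto`, `locator`, `all`, `getAttribute`, `innerText`), so a real Playwright `page` can be passed in directly.

```typescript
// Minimal subset of Playwright's Page/Locator API used below.
interface LocatorLike {
  all(): Promise<LocatorLike[]>;
  getAttribute(name: string): Promise<string | null>;
  innerText(): Promise<string>;
}
interface PageLike {
  goto(url: string): Promise<unknown>;
  locator(selector: string): LocatorLike;
}

// Hypothetical per-board recipe: where the listing links are, and which
// element wraps a whole job ad.
interface BoardRecipe {
  searchUrl: string;
  linkSelector: string; // e.g. 'a[data-testid="job-link"]'
  adSelector: string; // e.g. '#job-description'
}

interface RawListing {
  url: string;
  text: string;
}

async function scrapeBoard(page: PageLike, recipe: BoardRecipe): Promise<RawListing[]> {
  await page.goto(recipe.searchUrl);

  // Collect every job URL first, since the link elements are gone once we
  // navigate away from the search page.
  const urls: string[] = [];
  for (const link of await page.locator(recipe.linkSelector).all()) {
    const href = await link.getAttribute("href");
    if (href) urls.push(new URL(href, recipe.searchUrl).toString());
  }

  const listings: RawListing[] = [];
  for (const url of urls) {
    await page.goto(url);
    // Read the text of the element wrapping the whole ad, not individual fields.
    const text = await page.locator(recipe.adSelector).innerText();
    listings.push({ url, text: text.trim() });
  }
  return listings;
}
```

Keeping the selectors in a small data object means each new board only needs a new recipe, not a new function, as long as its pages follow the same "list of links, then one ad per page" pattern.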

During this process, I discovered that many job boards embed machine-readable versions of their listings so that “good” (wanted) scrapers like Google’s Googlebot can better understand the content and potentially boost the page in search rankings. Fortunately for me, this also makes my scraping job much easier, even if my scraper probably isn’t on their list of welcome visitors.

Both the raw text and any available machine data are saved for processing in the next step.
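The post doesn't say which machine-readable format the boards use. One common choice is schema.org `JobPosting` data embedded as JSON-LD in a `<script type="application/ld+json">` tag, so the sketch below assumes that. The function name is made up, and the regex is a quick-and-dirty approach rather than a full HTML parser.

```typescript
// Assumption: the board embeds schema.org JobPosting data as JSON-LD, i.e.
// <script type="application/ld+json">{ "@type": "JobPosting", ... }</script>
type JsonObject = Record<string, unknown>;

function extractJobPostings(html: string): JsonObject[] {
  const scriptRe =
    /<script[^>]*type=["']application\/ld\+json["'][^>]*>([\s\S]*?)<\/script>/gi;
  const postings: JsonObject[] = [];

  let m: RegExpExecArray | null;
  while ((m = scriptRe.exec(html)) !== null) {
    let parsed: unknown;
    try {
      parsed = JSON.parse(m[1]);
    } catch {
      continue; // malformed block: skip it, the raw text is still available
    }
    // A block may hold a single object or an array of them.
    const candidates: unknown[] = Array.isArray(parsed) ? parsed : [parsed];
    for (const item of candidates) {
      if (
        typeof item === "object" &&
        item !== null &&
        (item as JsonObject)["@type"] === "JobPosting"
      ) {
        postings.push(item as JsonObject);
      }
    }
  }
  return postings;
}
```

With Playwright, the `html` argument would typically come from `await page.content()`.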

The Eternal Fight Against Cloudflare, or Why I Used “Patchright”

Cloudflare’s anti-bot protection proved to be a significant obstacle, blocking many of my requests on certain sites. To circumvent this, I had to resort to a patched version of Playwright called “patchright”, which is a drop-in replacement using the chrome_undetected driver.

Additionally, I had to run the scraper with a visible browser window instead of headless mode (“no visible window” mode), as headless browsers instantly trigger Cloudflare’s defenses. This wasn’t a major inconvenience since I use multiple virtual desktops anyway and could simply place the Chrome window on a desktop I wasn’t actively using.

Structuring and Correcting Raw Job Listings

The machine-readable data embedded in job listings often contains errors. I’ve seen positions listed as “10-12 months” that are actually flexible 1-3 year positions. The unstructured text generally contains more accurate information.

My solution: provide both sources to an LLM and force a structured output. This structured data includes the actual job title, technologies used (so I can quickly determine if an “engineering” job means actual engineering or just making Excel spreadsheets for 8 hours a day), company name, location (also frequently mislabeled), and more.
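To make the idea concrete, here is a dependency-free sketch of the two halves of that step: building a prompt that contains both sources, and rejecting any LLM answer that doesn't match the expected shape. In the actual pipeline the second half is the job of InstructorJS and Zod; the hand-written check below is only a stand-in for a Zod schema. The field names, the prompt wording, and both function names are assumptions, and the LLM call itself is left out.

```typescript
// Fields named in the post; the exact names and types here are assumptions.
interface StructuredJob {
  title: string;
  company: string;
  location: string;
  technologies: string[];
}

// Put both sources in one prompt and tell the model which one to trust.
function buildPrompt(rawText: string, machineData: unknown): string {
  return [
    "Extract the job listing below as JSON with keys:",
    "title, company, location, technologies (array of strings).",
    "When the two sources disagree, prefer the page text.",
    "--- PAGE TEXT ---",
    rawText,
    "--- EMBEDDED DATA (may contain errors) ---",
    JSON.stringify(machineData ?? null, null, 2),
  ].join("\n");
}

// Hand-written stand-in for a Zod schema: reject anything off-shape.
function parseStructuredJob(llmOutput: string): StructuredJob {
  const data: unknown = JSON.parse(llmOutput);
  if (typeof data !== "object" || data === null) {
    throw new Error("LLM output is not a JSON object");
  }
  const o = data as Record<string, unknown>;
  for (const key of ["title", "company", "location"]) {
    if (typeof o[key] !== "string") throw new Error(`"${key}" must be a string`);
  }
  const techs = o["technologies"];
  if (!Array.isArray(techs) || !techs.every((t) => typeof t === "string")) {
    throw new Error('"technologies" must be an array of strings');
  }
  return {
    title: o["title"] as string,
    company: o["company"] as string,
    location: o["location"] as string,
    technologies: techs as string[],
  };
}
```

The point of validating is that a malformed answer fails loudly at this step instead of producing a half-empty listing further down the pipeline.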

[!Note] Among the LLMs I tested, Claude 3.7 Sonnet was undoubtedly the best, with a 100% success rate (or 0% error rate) in structuring the data. Unfortunately, it’s also the most expensive LLM I tried, which quickly burned through my account balance.

After processing, each structured job listing is saved to a dedicated folder on my disk, with each file containing information for a single position.
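A minimal sketch of the "one file per listing" layout, using only Node's standard library. The file-naming scheme (a hash of the listing URL) and the `SavedJob` shape are assumptions made for the example, not necessarily what the real scraper does.

```typescript
import { createHash } from "node:crypto";
import * as fs from "node:fs";
import * as path from "node:path";

// Hypothetical minimal shape; the real records carry more fields.
interface SavedJob {
  url: string;
  title: string;
}

// One file per listing, named after a hash of its URL so that scraping the
// same ad twice overwrites the file instead of creating a duplicate.
function saveListing(dir: string, job: SavedJob): string {
  fs.mkdirSync(dir, { recursive: true });
  const id = createHash("sha256").update(job.url).digest("hex").slice(0, 16);
  const file = path.join(dir, `${id}.json`);
  fs.writeFileSync(file, JSON.stringify(job, null, 2), "utf8");
  return file;
}
```

Plain JSON files keep the storage trivially inspectable: any listing can be opened, fixed, or deleted with a text editor.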

Vibe-Coding a GUI for the Job Listings

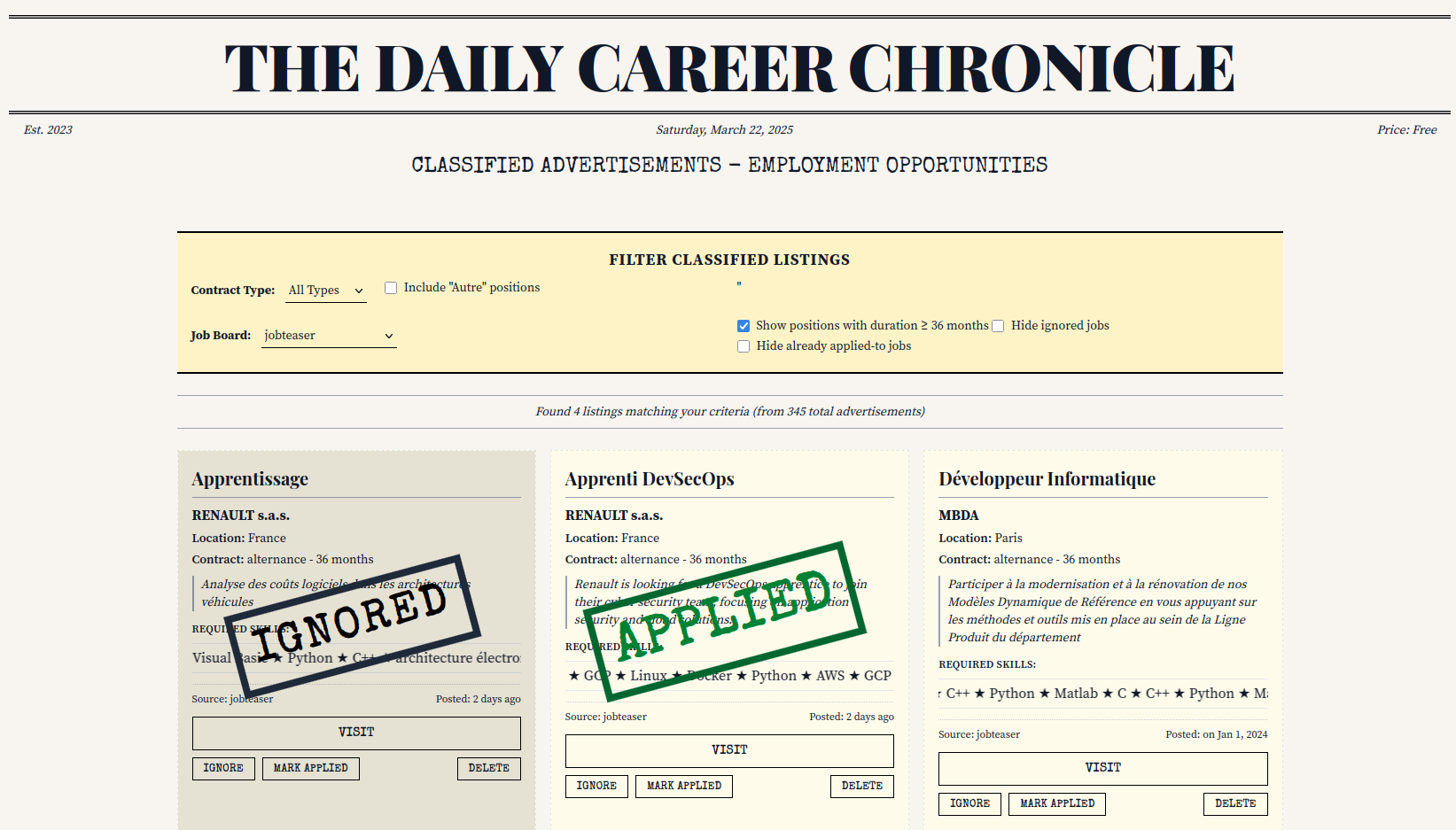

With my data collection and processing pipeline complete, I needed an interface to display the results. Wanting to create something different from my previous projects (cafeduvesper.net and rockpapersimmer.com), I decided to style it like a newspaper classified ads section.

However, implementation promised to be an uninteresting, error-prone task. I knew exactly how to do it, but it would still have taken several hours just to display JSON data in an aesthetically pleasing HTML page.

Since all the “cool kids on Twitter” were apparently experimenting with “vibe-coding” (prompting an LLM to code applications instead of writing the code yourself), I decided to give it a try. I already had a lighttpd server with CGI set up (see my article on CGI for details, but essentially it lets me type http://localhost:19786/jobs.cgi, and the server runs the script and returns whatever it outputs; trivial to set up, and perfect for quick personal UI apps).
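For readers who haven't met CGI: the script's whole job is to print HTTP headers, a blank line, and then the page body to standard output. The sketch below shows that contract with a hypothetical `cgiResponse` function. It is written in TypeScript only to stay consistent with the rest of the stack; the real `jobs.cgi` is a different script, and its markup is not reproduced here.

```typescript
// Escape text before interpolating it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// A CGI response is just headers, a blank line, then the body.
function cgiResponse(titles: string[]): string {
  const items = titles.map((t) => `<li>${escapeHtml(t)}</li>`).join("");
  const body = `<!doctype html><title>Jobs</title><ul>${items}</ul>`;
  return `Content-Type: text/html; charset=utf-8\r\n\r\n${body}`;
}
```

An actual script would end with something like `process.stdout.write(cgiResponse(titles))`, and the web server would forward that output to the browser.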

Python, being THE “can-do-anything-out-of-the-box” language that everyone (including LLMs but excluding me) loves, seemed like the natural choice. I provided a sample of six structured job listings to the LLM and requested a newspaper ads section layout.

[!Note] This choice contradicts my earlier “use what you know and like” principle, and I’m still not entirely sure why I went with Python. I think I assumed it would be easier for the LLM, and frankly, I didn’t feel like correcting its code anyway.

After several rounds of prompting, thinking, testing, and copying the entire script back-and-forth into the prompts, I arrived at a result I genuinely love.

The interface includes buttons to:

- View the original job listing on the source website

- “Ignore” listings I don’t want to apply for but want to mark as processed

- “Mark applied” for positions I’ve submitted applications to

- “Delete” to remove the underlying file entirely (in case something goes wrong, as I don’t want to go fish for the specific file)
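Since every listing lives in its own file, the actions behind these buttons can stay very small. The sketch below is one way they could work, assuming the status is stored as a `status` field inside the listing's JSON file. That field, the `Status` type, and both function names are hypothetical, and this is not the generated UI code.

```typescript
import * as fs from "node:fs";

type Status = "new" | "ignored" | "applied";

// "Ignore" and "Mark applied": rewrite the listing's file with a new status.
function setStatus(file: string, status: Status): void {
  const job = JSON.parse(fs.readFileSync(file, "utf8")) as Record<string, unknown>;
  job["status"] = status;
  fs.writeFileSync(file, JSON.stringify(job, null, 2), "utf8");
}

// "Delete": remove the underlying file entirely.
function deleteListing(file: string): void {
  fs.rmSync(file, { force: true });
}
```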

While Claude wrote most of the UI code (all but a dozen lines, tops), the original ideas, such as the newspaper classified ads aesthetic, marquees for technologies, the buttons, and the “IGNORED” and “APPLIED” stamps, are all mine. The scraper is all handwritten, and I could not even use Claude for part of it (as I had burned through my balance, as previously mentioned). So I’m still quite pleased with my work.

This project has significantly streamlined my apprenticeship search and was genuinely enjoyable to build!